Preparing Your Systems

Let’s start with the basics. These best practices are essential for your AI agents to navigate your system effectively. Let’s run through them.

- Naming Conventions: AI agents read program names, folder names, and asset names to understand context. If you have programs named “Test_v3_Final_REALLY_FINAL,” the AI won’t know what to do with them.

- Templatization: Create consistent templates for each program type. AI agents need patterns to identify and follow.

- Tokenization: The more you use tokens instead of hard-coded values, the more an AI agent can help you. Tokens are variable placeholders that AI can understand and manipulate.

- Data Hygiene: Clean up duplicates, standardize values, and maintain field integrity. AI agents will perpetuate bad data if that’s what they find.

- Clear Descriptions: Use description fields everywhere: on programs, smart campaigns, and assets. AI agents can read these much faster than humans can, and they provide critical context.
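To make the tokenization point concrete, here is a minimal sketch of why tokens are easier for an agent to work with than hard-coded values. It assumes Marketo-style `{{my.Token Name}}` placeholders. The `render` and `missing_tokens` helpers and the sample token names are hypothetical and only there for illustration.

```python
import re

# Matches Marketo-style program tokens such as {{my.Event Date}}.
TOKEN_PATTERN = re.compile(r"\{\{my\.([^}]+)\}\}")


def render(template: str, tokens: dict[str, str]) -> str:
    """Replace each {{my.Name}} placeholder with its value.

    Unknown tokens are left untouched so a reviewer can spot them.
    """
    def substitute(match: re.Match) -> str:
        name = match.group(1).strip()
        return tokens.get(name, match.group(0))

    return TOKEN_PATTERN.sub(substitute, template)


def missing_tokens(template: str, tokens: dict[str, str]) -> list[str]:
    """List placeholder names used in the template but not defined."""
    names = {name.strip() for name in TOKEN_PATTERN.findall(template)}
    return sorted(names - set(tokens))


if __name__ == "__main__":
    email = "Join us on {{my.Event Date}} at {{my.Venue}}."
    values = {"Event Date": "May 4"}  # hypothetical token values
    print(render(email, values))
    print(missing_tokens(email, values))
```

Because the values live in one place, an agent only has to change the token dictionary, and anything it forgot to fill in is easy to detect.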

It’s also very important to make it crystal clear when something is legacy content.

You can achieve this in a few ways:

- Create an “Archive” or “Legacy” folder

- Add “Z_” to the beginning of old program names

- Use description fields to mark deprecated templates

If you don’t clearly mark legacy content, your AI agents might start using outdated templates or get trapped following obsolete processes.
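As a rough sketch, the naming and legacy rules above can be turned into a quick audit of your program list. The `Z_` prefix and the “Archive” and “Legacy” folder names come from the list above. The naming pattern itself (`TYPE-YYYY-MM-Name`) and the `classify_program` helper are assumptions, so swap in your own convention.

```python
import re

# Hypothetical convention: <TYPE>-<YYYY>-<MM>-<Short-Name>,
# for example WBN-2025-03-Partner-Summit. Replace with your own.
NAME_PATTERN = re.compile(r"^(WBN|EM|EVT)-\d{4}-(0[1-9]|1[0-2])-[A-Za-z0-9-]+$")

# Folder names that mark content as legacy (from the list above).
LEGACY_FOLDERS = {"archive", "legacy"}


def classify_program(name: str, folder: str) -> str:
    """Return 'legacy', 'ok', or 'needs-rename' for one program."""
    if name.startswith("Z_") or folder.lower() in LEGACY_FOLDERS:
        return "legacy"
    if NAME_PATTERN.match(name):
        return "ok"
    return "needs-rename"


if __name__ == "__main__":
    programs = [
        ("WBN-2025-03-Partner-Summit", "Webinars"),
        ("Test_v3_Final_REALLY_FINAL", "Webinars"),
        ("Z_2014 Nurture", "Nurture"),
    ]
    for name, folder in programs:
        print(f"{name}: {classify_program(name, folder)}")
```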

Creating Solid Documentation

With those best practices in place, let’s move on to documentation. This is going to be your AI agent’s training manual. This is a crucial step that will help your agents learn your unique system (just as you would train any new teammate coming on board).

#1 Process Steps

Explain each process as clearly as possible, step-by-step. Remember: You’ve probably looked at countless marketing automation instances in your career, and none of them follow the exact same process. They all have peculiarities that AI agents won’t magically understand. Specific documentation fixes this.

#2 Unspoken Rules

These are the “tribal knowledge” items that exist in your head as a result of your experience and your interactions with other folks at your company or in the industry. They are important rules, but ones that AI will never be able to intuit. Be sure to document as many of these as you can.

These could cover a wide array of things, for example:

- “We always clone that one program from 2014 for this specific use case”.

- “For partner webinars, use the Partner template, not the standard template”.

- “If the campaign name contains ‘Exec’, it goes in the Executive Events folder”.

- “We never use the green email template because it has formatting issues”.
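Once rules like these are written down, they can also be expressed in a form an agent (or a script) can apply consistently. Here is a minimal sketch using two of the example rules above. The “Standard” template name, the “General” default folder, and the `plan_program` helper are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Placement:
    template: str
    folder: str


def plan_program(campaign_name: str, program_type: str) -> Placement:
    """Apply the documented house rules to pick a template and folder."""
    # Rule: partner webinars use the Partner template, not the standard one.
    if program_type == "partner webinar":
        template = "Partner"
    else:
        template = "Standard"  # hypothetical default template name

    # Rule: campaign names containing 'Exec' go in the Executive Events folder.
    if "Exec" in campaign_name:
        folder = "Executive Events"
    else:
        folder = "General"  # hypothetical default folder

    return Placement(template=template, folder=folder)


if __name__ == "__main__":
    print(plan_program("Q3 Exec Roundtable", "partner webinar"))
```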

#3 Make it AI-Friendly

AI models often struggle with negative instructions. So instead of writing “Do not do X”, write “Instead of X, do Y”.

Here’s a real-world example to illustrate this further.

Bad: “Do not use the blue template for executive events”.

Good: “For executive events, use the gold template instead of the blue template”.

And make sure you use explicit instructions when dealing with AI. Be specific about what should happen, not just what shouldn’t. The more explicit you are, the less room for erroneous interpretation there is.
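If you have a lot of existing documentation, a simple script can help you find the lines worth rewriting. The sketch below flags lines that contain a negative phrase but no “instead”. The phrase list and the `flag_negative_instructions` helper are assumptions, and a keyword check like this will miss some cases and over-flag others, so treat the output as a to-do list rather than a verdict.

```python
import re

# Phrases that usually signal a negative-only instruction.
# Both straight and curly apostrophes are accepted in "don't".
NEGATIVE_PATTERN = re.compile(r"\b(do not|don['’]t|never|avoid)\b", re.IGNORECASE)

# A line that says "instead" most likely already names the alternative.
ALTERNATIVE_PATTERN = re.compile(r"\binstead\b", re.IGNORECASE)


def flag_negative_instructions(lines: list[str]) -> list[str]:
    """Return lines that say what not to do without saying what to do."""
    flagged = []
    for line in lines:
        if NEGATIVE_PATTERN.search(line) and not ALTERNATIVE_PATTERN.search(line):
            flagged.append(line)
    return flagged


if __name__ == "__main__":
    docs = [
        "Do not use the blue template for executive events.",
        "For executive events, use the gold template instead of the blue template.",
    ]
    for line in flag_negative_instructions(docs):
        print("Rewrite:", line)
```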

#4 Get Video Transcriptions

A lot of internal documentation exists as videos or recorded training sessions. AI agents can’t watch videos (yet), but they can definitely process transcripts and screenshots.

Go through your resources and transcribe training videos, extract key screenshots, and convert visual walkthroughs to written steps. Several AI services can handle the transcription for you (we like Descript).

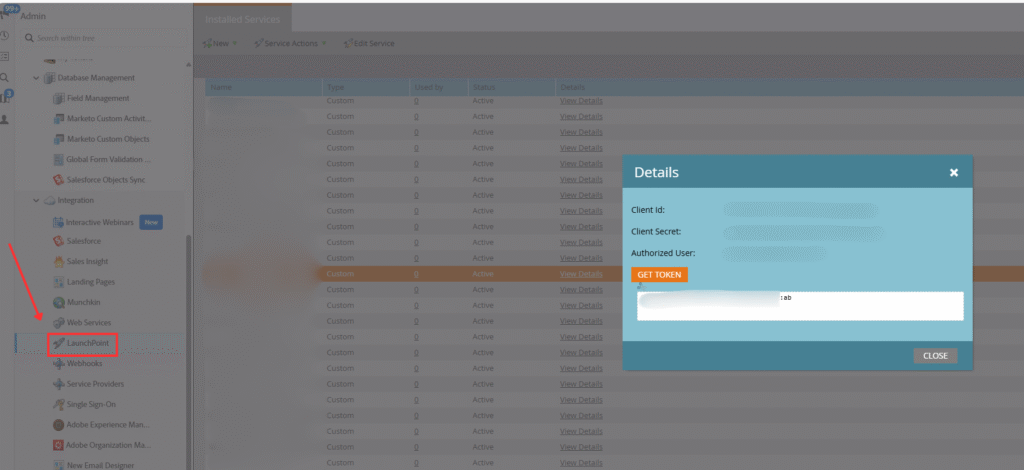

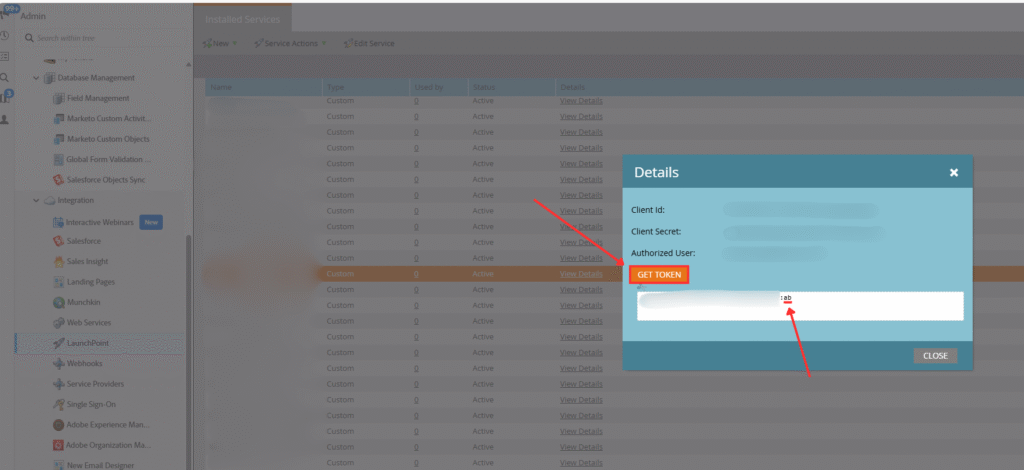

#5 Document API Steps

Now we’re getting into some more advanced stuff, but it’s incredibly helpful for AI agents to have. Go ahead and document the specific API calls needed for each of your processes.

For example, this may look something like:

To update program tokens:

- POST to /rest/asset/v1/program/{id}/token.json

- Required parameters:

  - name: token name

  - value: new value

  - type: text, rich text, score, or date

- Authentication: Bearer token in header

- Expected response: 200 OK with updated token details
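To show how documentation at this level translates into something an agent can act on, here is a minimal sketch that turns the example above into a request description. It only builds the request as plain data and sends nothing. The endpoint path is copied from the example as written, so check it against your vendor's API reference before relying on it. The base URL, the form-encoded body, and the `build_token_update` helper are assumptions.

```python
from urllib.parse import urlencode

# Token types listed in the documentation example above.
ALLOWED_TYPES = {"text", "rich text", "score", "date"}


def build_token_update(base_url: str, program_id: int, access_token: str,
                       name: str, value: str, token_type: str) -> dict:
    """Describe the documented call as plain data; nothing is sent."""
    if token_type not in ALLOWED_TYPES:
        raise ValueError(f"unsupported token type: {token_type!r}")
    return {
        "method": "POST",
        # Path taken from the example above; verify before use.
        "url": f"{base_url}/rest/asset/v1/program/{program_id}/token.json",
        "headers": {"Authorization": f"Bearer {access_token}"},
        # Assumption: parameters are sent as a form-encoded body.
        "body": urlencode({"name": name, "value": value, "type": token_type}),
    }


if __name__ == "__main__":
    request = build_token_update(
        "https://example.invalid",  # hypothetical base URL
        1234, "ACCESS_TOKEN", "Event Date", "2025-05-04", "date",
    )
    print(request["method"], request["url"])
    print(request["body"])
```

Validating the token type up front means a malformed request is caught before it ever reaches the API.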

AI training becomes much easier (and far more effective) when you have this level of documentation to feed it.

Preparing Your Team (and Yourself)

We alluded to this in the intro: it’s really important for you and your team to calibrate expectations and prepare for what the introduction of AI agents actually means for both day-to-day work and long-term progress. Here are some important points that will get everyone aligned.

#1 Adjust Expectations

There’s a lot of hype out there surrounding what AI can do. And while AI can have a massive positive impact on productivity, it won’t happen instantly. Many think that as soon as AI agents are turned on, they will start building all your campaigns the very next day.

The reality is, every new technology requires process redesign and learning. In fact, productivity will likely decrease during this adjustment period before it increases.

Why is that? When you implement AI agents, several things start happening:

- Your team needs to learn new workflows

- You’ll discover edge cases and limitations

- Processes need adjustment (for example, humans still need to update smart campaigns in Marketo)

- There’s a learning curve in knowing when to use AI vs. doing it manually (very important)

This is all normal. Give your team a week or two to adapt, and set expectations with your managers and stakeholders about this adjustment period.

#2 Understand the Impact by Seniority

It’s also worth noting that AI productivity gains are not evenly distributed. Due to the nature of the tasks that AI is currently most adept at, the impact will vary by role. We can break this down into two buckets based on seniority.

- Junior Employees: Experience the highest productivity increase. AI agents help with basic tasks they’re still learning, allowing them to work faster and with more confidence.

- Senior Employees: Experience lower (but still positive) productivity increase. They already know how to do things efficiently, so AI is more of an enhancement than a total transformation.

#3 Choose Your Collaboration Model

Now, let’s quickly go through the two main models we can use to define our human-AI collaboration system. These will help us structure our processes and specify where productivity gains can actually happen. We first came across them in the writing of author and Wharton School professor Ethan Mollick, who describes both models along with a concept called “The Jagged Frontier”, which we’ll touch on shortly.

The Centaur Model

This is named after the mythical creature that’s half human and half horse. The Centaur model defines clear boundaries between what the AI does and what the human does, and neither side crosses into the other’s territory.

For example: AI clones the program and updates tokens → Human reviews, updates smart campaigns, and does QA. There are clear handoff points.

This model is best for structured processes with clear steps that can be divided between human and AI capabilities.
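One way to make the handoff points explicit is to write the process down as a list of steps, each with a single owner. The sketch below uses the example steps above. The `Step` type and the `handoff_points` helper are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    name: str
    owner: str  # either "ai" or "human"


# The example process from above, one owner per step.
WEBINAR_BUILD = [
    Step("Clone program", "ai"),
    Step("Update tokens", "ai"),
    Step("Review build", "human"),
    Step("Update smart campaigns", "human"),
    Step("QA", "human"),
]


def handoff_points(steps: list[Step]) -> list[tuple[str, str]]:
    """Return (step before, step after) pairs where the owner changes."""
    return [
        (prev.name, nxt.name)
        for prev, nxt in zip(steps, steps[1:])
        if prev.owner != nxt.owner
    ]


if __name__ == "__main__":
    for before, after in handoff_points(WEBINAR_BUILD):
        print(f"Handoff after '{before}', before '{after}'")
```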

The Cyborg Model

Conversely, like a cyborg that seamlessly blends human and machine, this model has no clear boundaries. You’re constantly experimenting with things like:

- What can be handed off to AI?

- Should I write this specific code or ask AI to write it?

- Now that AI wrote it, should I refine it?

For example: You ask AI to write data analysis code, but you review and rewrite the statistics portions because you know AI can mess up statistical calculations.

This model is best for creative work, complex analysis, and scenarios where you’re still discovering the capabilities of your AI agents.

These models are more of a guide than a strict process. Most will gravitate more towards one or the other, depending on how they’re using AI. There’s no right answer. Try each for different scenarios and see what works best for you.

Two Critical Traps to Avoid

With all of that in mind, we want to leave you with two significant pitfalls to watch out for as your team adopts AI agents.

Trap 1: The Jagged Frontier

As we know by now, artificial intelligence works completely differently from human intelligence. It can do certain complex tasks with incredibly impressive speed, but then fail at something as simple as counting the “R’s” in “Strawberry”.

This inconsistency in AI capabilities is known as the “Jagged Frontier”, a term we first heard from Ethan Mollick.

Think of AI capabilities as uneven, inconsistent, and “jagged”, where it excels at complex tasks but struggles with some simple ones.

Why does this matter? We have to challenge our assumptions about what AI actually can or can’t do. We may think that a simple task is easily achievable by AI, but then it struggles. And when we try to use our agents for tasks they can’t handle, productivity will inevitably tank. You could run into hallucinations, errors, broken campaigns, and so on.

To overcome this:

- Continuously map the jagged frontier: Keep testing what AI can and can’t do

- Don’t assume: Task difficulty for humans ≠ task difficulty for AI

- Share learnings: When you discover a capability or limitation, document it for your team

- Fail safely: Test AI outputs in non-critical scenarios first

Ethan’s blog post on this, and the full study behind it, are both worth reading.

Trap 2: Automation Bias

This second trap is perhaps the more dangerous one. And we have a fascinating real-world example that illustrates how it works (known as the Paris subway story).

In short, Paris introduced semi-automated subway lines that were partially controlled by AI and partially by humans. The results were:

- Fully automated lines: Very few accidents

- Human-operated lines: Very few accidents

- Semi-automated lines: Significantly MORE accidents

Why were the semi-automated lines seeing more accidents? Because when humans see a machine doing something right 99% of the time, they stop paying attention. They assume it will be right 100% of the time. So when that 1% error occurs, humans aren’t ready to catch it.

In other words, we’re generally pretty bad at being “the human in the loop”.

So in MOPs terms, if your AI Agent creates a campaign correctly 99 times, you’ll likely stop carefully reviewing the 100th+ one. But this is exactly where the error could slip through (maybe there’s a wrong token, broken link, incorrect audience, etc.).
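A quick calculation shows why a 99% success rate is less comforting than it sounds. The sketch below assumes each campaign has an independent 1% chance of containing an error. That figure is the illustrative number from the paragraph above, not a measured rate, and `chance_of_any_error` is a hypothetical helper.

```python
def chance_of_any_error(per_item_error_rate: float, items: int) -> float:
    """Probability that at least one of `items` independent builds has an error."""
    return 1 - (1 - per_item_error_rate) ** items


if __name__ == "__main__":
    for count in (10, 100, 500):
        probability = chance_of_any_error(0.01, count)
        print(f"{count} campaigns: {probability:.1%} chance of at least one error")
```

Under these assumptions, the chance of at least one error across 100 campaigns is roughly 63%, so unreviewed output at volume almost guarantees that something slips through.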

We have to make sure that automation bias doesn’t make us complacent. We can’t afford to trust machines blindly.

To stay on top of this, follow systematic quality controls rather than solely relying on human review.

This could mean cross-checking with other LLMs (having a second AI model review the first one’s work for obvious errors), as well as using other systems like the ones listed below.

Automated quality measurements:

- Track campaign completion rates

- Monitor form submission rates

- Check email deliverability scores

- Alert on suspicious patterns

Statistical monitoring:

- Compare performance to historical averages

- Flag outliers automatically

- Investigate when metrics drop below thresholds
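As a minimal sketch of the statistical monitoring idea, the function below compares the latest value of a metric to its historical average and flags it when it sits unusually far away. The threshold of three standard deviations, the sample open rates, and the `is_outlier` helper are assumptions. Tune them to your own data.

```python
from statistics import mean, stdev


def is_outlier(history: list[float], latest: float, z_threshold: float = 3.0) -> bool:
    """Flag `latest` if it is more than `z_threshold` standard deviations
    away from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate the spread
    average = mean(history)
    spread = stdev(history)
    if spread == 0:
        # Every past value was identical, so any change is worth a look.
        return latest != average
    return abs(latest - average) / spread > z_threshold


if __name__ == "__main__":
    open_rates = [0.21, 0.19, 0.20, 0.22, 0.18]  # hypothetical past campaigns
    for latest in (0.21, 0.05):
        status = "investigate" if is_outlier(open_rates, latest) else "ok"
        print(f"latest open rate {latest:.2f}: {status}")
```

A check like this runs on every campaign without getting tired, which is exactly what a human reviewer on the 100th build cannot promise.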

The goal is to create AI safety nets that don’t rely solely on vigilant humans trying to catch every mistake.